Ulsan National Institute of Science and Technology (UNIST) announced that a collaborative research team has developed a groundbreaking robotic vision sensor inspired by brain synapses. The team was led by Professor Choi Moon Ki of the Department of Materials Science and Engineering at UNIST, Dr. Choi Chang Soon of the Korea Institute of Science and Technology (KIST), and Professor Kim Dae-hyung of Seoul National University.

Vision sensors serve as the eyes of machines, capturing visual information and relaying it to processors that function like the brain.

When this information is transmitted without filtering, data volume grows, processing slows, and recognition accuracy drops because unnecessary data is included.

These issues are particularly pronounced in environments with sudden lighting changes or areas that contain both bright and dark elements.

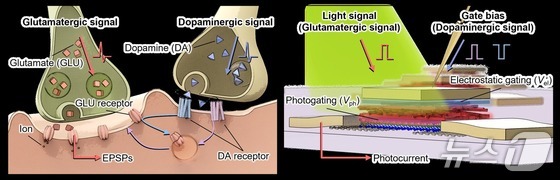

The collaborative research team developed a vision sensor that mimics the dopamine-glutamate signaling pathway found in brain synapses, enabling selective detection of high-contrast visual information, such as contour outlines.

In the brain, dopamine reinforces important information by regulating glutamate, a mechanism that the team’s sensor design mimics.

“By integrating in-sensor computing technology that provides the eye with certain brain functions, the system can automatically adjust image brightness and contrast while filtering out unnecessary data,” explained Professor Choi Moon Ki. “This fundamentally reduces the burden on robotic vision systems that need to process tens of gigabits of image data per second.”

Test results show that the new vision sensor reduced image data transmission by approximately 91.8% while raising accuracy in object recognition simulations to approximately 86.7%.
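To put the reduction figure in concrete terms, the arithmetic can be sketched as follows. The 10 Gbit/s raw rate is an assumed illustrative value (the article says only "tens of gigabits"), and the function name is hypothetical.

```python
def transmitted_rate(raw_rate_gbps: float, reduction_percent: float) -> float:
    """Return the data rate left after removing reduction_percent of the raw stream."""
    if not 0.0 <= reduction_percent <= 100.0:
        raise ValueError("reduction_percent must be between 0 and 100")
    return raw_rate_gbps * (1.0 - reduction_percent / 100.0)


# Assumed raw rate of 10 Gbit/s; 91.8% is the reduction reported in the tests.
remaining = transmitted_rate(10.0, 91.8)
print(f"{remaining:.2f} Gbit/s")  # prints "0.82 Gbit/s"
```

Under that assumption, less than one tenth of the original stream would still need to be sent to the processor.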

The sensor utilizes a phototransistor whose current response varies with the gate voltage. This gate voltage acts like dopamine in the brain by regulating response intensity, while the phototransistor’s current output simulates glutamate signal transmission.

Adjusting the gate voltage enhances light sensitivity, allowing for the detection of clear contour outlines even in low-light conditions.

The sensor’s design allows output current to vary based on both absolute light intensity and relative brightness differences. This results in stronger responses to high-contrast areas, such as outlines, while suppressing uniform backgrounds.
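The behavior described above can be illustrated with a toy software analogy. This is not the team's device model: the function, the neighbour-averaging rule, and the `gate_gain` parameter (a stand-in for the effect of the gate voltage) are all assumptions made for illustration, and the sketch covers only the relative-brightness part of the response.

```python
def contrast_response(row, gate_gain=1.0):
    """Toy response: gain times the absolute difference between each pixel
    and the mean of its immediate neighbours (edge pixels reuse their own value)."""
    responses = []
    for i, value in enumerate(row):
        left = row[i - 1] if i > 0 else value
        right = row[i + 1] if i < len(row) - 1 else value
        neighbour_mean = (left + right) / 2.0
        responses.append(gate_gain * abs(value - neighbour_mean))
    return responses


# A dim uniform region followed by a step up to a brighter uniform region.
row = [0.1, 0.1, 0.1, 0.9, 0.9, 0.9]
print(contrast_response(row))                 # nonzero only next to the step
print(contrast_response(row, gate_gain=2.0))  # higher gain amplifies the outline
```

In this sketch the uniform stretches produce no response and only the two pixels on either side of the brightness step respond, which mirrors the idea of emphasizing outlines while suppressing backgrounds. Raising the hypothetical gain scales that edge response, loosely analogous to adjusting the gate voltage.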

Dr. Choi Chang Soon from KIST emphasized that the technology can be applied to various vision-based systems, including robotics, autonomous vehicles, drones, and Internet of Things (IoT) devices. He also noted that by enhancing both data processing speed and energy efficiency, it could become a cornerstone of next-generation AI vision technology.

The research was supported by the National Research Foundation of Korea’s Excellent Young Researcher Program, KIST’s Future Source Semiconductor Technology Development Project, and the Institute for Basic Science.

The findings were published online in the international academic journal Science Advances on May 2.