A new AI technology has been developed to reconstruct 3D scenes of two-handed manipulation of unfamiliar objects.

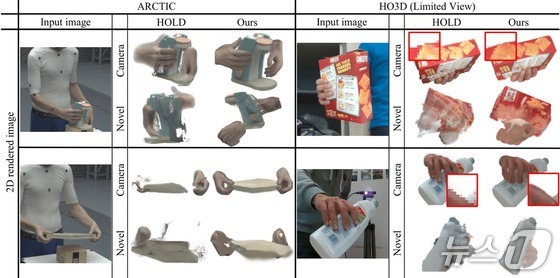

On June 9, Ulsan National Institute of Science and Technology (UNIST) announced that Professor Baek Seung Ryul’s team from the Graduate School of Artificial Intelligence developed an AI model called BIGS (Bimanual Interaction 3D Gaussian Splatting). This model can visualize complex interactions between both hands and previously unseen tools in real-time 3D using only a single RGB video.

Because the AI receives only 2D data captured by a camera, reconstructing the actual positions and three-dimensional shapes of hands and objects requires converting this data back into 3D.
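Why a 2D image alone does not determine 3D positions can be illustrated with a minimal pinhole-camera sketch. The focal length, image center, and sample points below are assumed values chosen for illustration and are not taken from the BIGS work.

```python
def project(point, focal=500.0, cx=320.0, cy=240.0):
    """Pinhole projection of a camera-space point (x, y, z) to a pixel (u, v).

    focal, cx and cy are illustrative camera parameters (assumptions).
    """
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


def unproject(pixel, depth, focal=500.0, cx=320.0, cy=240.0):
    """Invert the projection; this only works once a depth value is supplied."""
    u, v = pixel
    return ((u - cx) * depth / focal, (v - cy) * depth / focal, depth)


# Two different 3D points that lie on the same camera ray.
near = (0.1, 0.2, 1.0)
far = (0.2, 0.4, 2.0)

pixel_near = project(near)
pixel_far = project(far)

# Both points land on (practically) the same pixel, so the image alone
# cannot tell them apart; depth has to be estimated by the model.
print(pixel_near, pixel_far)
print(unproject(pixel_near, depth=1.0))
print(unproject(pixel_near, depth=2.0))
```

The same pixel maps back to different 3D points depending on the depth that is assumed, which is the ambiguity a single-camera method has to resolve.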

Previous technologies were limited to recognizing only one hand or handling pre-scanned objects, which restricted their ability to recreate interactive scenes in AR or VR realistically.

The BIGS model can reliably predict the entire shape even when hands are partially hidden or overlapping.

It also naturally reconstructs unseen parts of new objects by leveraging learned visual information.

Moreover, this reconstruction needs only a single RGB video from one camera, with no depth sensors or multiple camera angles, which makes it easy to apply in practical settings.

The AI model is based on 3D Gaussian Splatting.

Gaussian Splatting represents an object's shape as a cloud of points that each spread out softly, like a small blur, rather than as pixel-like points with sharp boundaries. This allows a more natural restoration of the contact surfaces between hands and objects.
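The difference between a softly spreading point and a sharp-edged one can be sketched as follows. This is a simplified illustration, assuming an isotropic Gaussian with a hypothetical width `sigma`; actual 3D Gaussian Splatting uses anisotropic Gaussians with per-point opacity and color, and nothing here is taken from the BIGS implementation.

```python
import math


def gaussian_weight(distance, sigma=0.01):
    """Influence of an isotropic Gaussian at a given distance from its center.

    sigma is an illustrative width in meters (assumption).
    """
    return math.exp(-0.5 * (distance / sigma) ** 2)


def hard_point_weight(distance, radius=0.01):
    """Influence of a sharp-edged point: full inside the radius, zero outside."""
    return 1.0 if distance <= radius else 0.0


# Compare how the two representations fade with distance from the center.
for d in (0.0, 0.005, 0.01, 0.015, 0.02):
    print(f"{d:.3f}  gaussian={gaussian_weight(d):.3f}  hard={hard_point_weight(d):.1f}")
```

The Gaussian weight decays smoothly, so neighboring points blend into one another, while the hard point switches abruptly from full to zero influence at its boundary.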

While overlapping or hidden hands make shape estimation difficult, the team overcame this by aligning all hands to a standard hand structure, known as the Canonical Gaussian.

They also applied a score distillation sampling (SDS) method using a pre-trained diffusion model to reconstruct the unseen backs of objects.
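The general idea behind score distillation sampling can be sketched with a toy example: noise is added to the current reconstruction, a diffusion model predicts that noise, and the gap between predicted and actual noise is used as a gradient. Everything below is an assumption made for illustration. The "pre-trained model" is replaced by a hypothetical analytic noise predictor whose prior prefers a single `TARGET` vector, and the optimized parameters are the rendered values themselves. It is not the team's implementation.

```python
import math
import random

# Hypothetical appearance that the toy prior considers plausible (assumption).
TARGET = [0.2, 0.8, 0.5]


def toy_noise_predictor(x_t, alpha):
    """Stand-in for a pre-trained diffusion model (assumption).

    This is the exact noise predictor for a prior concentrated on TARGET.
    """
    return [(xt - math.sqrt(alpha) * m) / math.sqrt(1.0 - alpha)
            for xt, m in zip(x_t, TARGET)]


def sds_gradient(x, rng):
    """One SDS gradient estimate: w(t) * (predicted noise - injected noise)."""
    alpha = rng.uniform(0.2, 0.9)           # random noise level
    eps = [rng.gauss(0.0, 1.0) for _ in x]  # injected Gaussian noise
    x_t = [math.sqrt(alpha) * xi + math.sqrt(1.0 - alpha) * e
           for xi, e in zip(x, eps)]
    eps_hat = toy_noise_predictor(x_t, alpha)
    weight = 1.0 - alpha                    # one common weighting choice
    return [weight * (eh - e) for eh, e in zip(eps_hat, eps)]


def optimize(x0, steps=200, lr=0.5, seed=0):
    """Gradient descent on the values x using SDS gradient estimates."""
    rng = random.Random(seed)
    x = list(x0)
    for _ in range(steps):
        grad = sds_gradient(x, rng)
        x = [xi - lr * g for xi, g in zip(x, grad)]
    return x


result = optimize([0.0, 0.0, 0.0])
print(result)
```

In this toy setting the values are pulled toward what the prior considers plausible. In a real pipeline the gradient would instead flow back through a renderer to the 3D representation, guiding regions that the camera never observed.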

Tests on international datasets such as ARCTIC and HO3Dv3 showed that BIGS outperformed existing methods in restoring hand poses, object shapes, and hand-object contact information, as well as in rendering quality.

The research was led by UNIST researcher Jeongwan On as the first author, with co-researchers Kwak Kyung Hwan, Kang Geun Young, Cha Jin Wook, Hwang Soo Hyun, and Hwang Hye In.

Professor Baek said, “This research is expected to be applied in real-time interaction reconstruction technologies for various fields such as virtual reality (VR), augmented reality (AR), robotic control, and remote surgery simulation.”

The study was accepted for presentation at the CVPR 2025 conference, held in the United States from June 11 to June 15. CVPR is a leading conference in computer vision.

This research was supported by the Ministry of Science and ICT, the National Research Foundation of Korea, and the Institute for Information and Communications Technology Planning and Evaluation.