Researchers have developed an artificial intelligence (AI) model that can transform blurry, choppy video footage into sharp, smooth video.

The Ulsan National Institute of Science and Technology (UNIST) announced on Monday that a team led by Professor Jaejun Yoo from the Graduate School of Artificial Intelligence has created an innovative AI model, BF-STVSR (Bidirectional Flow-based Spatio-Temporal Video Super-Resolution). This cutting-edge technology simultaneously enhances both video resolution and frame rates.

The research team emphasizes that resolution and frame rate are crucial elements in determining video quality.

Higher resolution results in sharper images with more defined details, while increased frame rates smooth out motion, reducing choppiness.

Traditional AI video restoration techniques typically treat resolution and frame rate as separate problems and rely on pre-trained optical flow prediction networks to generate the additional frames.

The optical flow method, which calculates the direction and speed of object movement to generate intermediate frames, is computationally intensive and prone to cumulative errors, thereby limiting both the speed and quality of video restoration.
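To make the idea concrete, the following is a minimal sketch of flow-based frame interpolation, not the method used by BF-STVSR or by any particular flow network. It assumes a hypothetical per-pixel flow field of `(dx, dy)` displacements from one frame to the next and moves each pixel part of the way along its motion vector; the function name `interpolate_frame` and the "faster pixels are drawn on top" rule are illustrative assumptions.

```python
import math

def interpolate_frame(frame0, flow, t=0.5, fill=0):
    """Forward-warp frame0 to time t (0..1) using per-pixel flow (dx, dy).

    frame0: 2D list of grayscale values.
    flow:   2D list of (dx, dy) displacements from frame0 to the next frame.
    """
    height, width = len(frame0), len(frame0[0])
    out = [[fill] * width for _ in range(height)]
    pixels = [(x, y) for y in range(height) for x in range(width)]
    # Assumption: draw slow pixels first so faster-moving pixels end up on top.
    # This is a crude stand-in for real occlusion handling.
    pixels.sort(key=lambda p: math.hypot(*flow[p[1]][p[0]]))
    for x, y in pixels:
        dx, dy = flow[y][x]
        # Move the pixel a fraction t of the way and round to the nearest cell.
        tx = math.floor(x + t * dx + 0.5)
        ty = math.floor(y + t * dy + 0.5)
        if 0 <= tx < width and 0 <= ty < height:
            out[ty][tx] = frame0[y][x]
    return out

# One bright pixel moving two cells to the right between frames.
frame0 = [[0, 9, 0, 0, 0]]
flow = [[(0, 0), (2, 0), (0, 0), (0, 0), (0, 0)]]
middle = interpolate_frame(frame0, flow, t=0.5)
print(middle)  # [[0, 0, 9, 0, 0]]
```

In this toy setup, any error in the estimated flow places the pixel in the wrong cell of the generated frame, which is one way to picture how flow errors carry over into the restored video.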

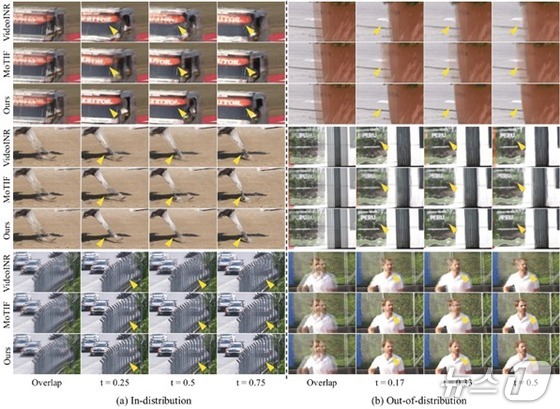

In contrast, BF-STVSR introduces signal processing techniques tailored to the characteristics of video. This allows the model to learn bidirectional motion between frames on its own, without relying on external optical flow prediction networks.

By inferring object contours based on this flow, the model can simultaneously improve both resolution and frame rates.

When applied to low-resolution, low-frame-rate videos, the model outperformed existing models on quality metrics including peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM).

Higher PSNR and SSIM values indicate a closer match to the original footage, meaning that even in videos with significant motion, the model can restore human figures naturally without distortion or degradation.
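For readers unfamiliar with the metric, PSNR can be computed from the mean squared error between a reference frame and a restored frame. The sketch below uses the standard definition and assumes 8-bit grayscale frames stored as nested lists; the function name and the sample pixel values are illustrative and are not taken from the study.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Return PSNR in decibels between two same-sized grayscale frames."""
    if len(reference) != len(restored) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(reference, restored)
    ):
        raise ValueError("frames must have the same shape")
    squared_errors = [
        (a - b) ** 2
        for row_a, row_b in zip(reference, restored)
        for a, b in zip(row_a, row_b)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical frames have no error
    return 10.0 * math.log10(max_value ** 2 / mse)

reference = [[50, 100], [150, 200]]
close = [[51, 99], [151, 199]]   # every pixel off by 1
rough = [[60, 90], [160, 190]]   # every pixel off by 10
print(psnr(reference, close) > psnr(reference, rough))  # True
```

The frame that deviates less from the reference scores higher, which is why a higher PSNR is read as a more faithful restoration.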

Professor Yoo highlighted the model’s versatility, stating that the technology can swiftly enhance not only low-quality CCTV and dashcam footage but also compressed streaming videos. Its applications span various fields, including media content production, medical image analysis, and virtual reality (VR) technology.

The research was led by Eunjin Kim as the primary author, with Hyunjin Kim contributing as a co-author.

The study has been accepted for presentation at CVPR 2025 (Conference on Computer Vision and Pattern Recognition), a leading international conference in the field of computer vision.