OpenAI is set to launch a new computationally intensive artificial intelligence (AI) model.

Given the substantial computational resources invested in enhancing this model’s performance, the company has signaled a premium service strategy that will include additional fees on top of its already high-priced plans.

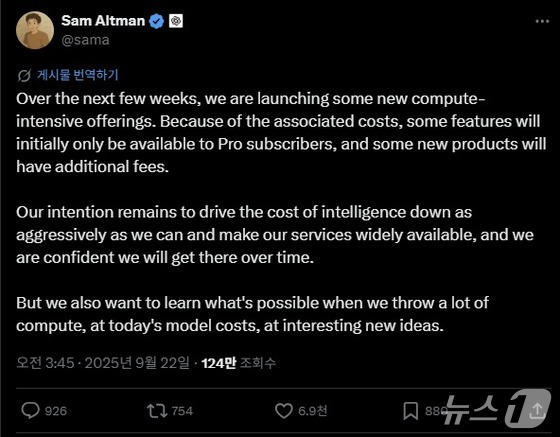

Sam Altman, Chief Executive Officer (CEO) of OpenAI, announced on his X (formerly Twitter) account on September 21 that the company plans to unveil a new computationally intensive service in the coming weeks. He noted that, because of the costs involved, some features will be exclusive to Pro subscribers, while others may incur extra charges.

The ChatGPT Pro subscription is currently priced at 200 USD per month.

Altman emphasized that the company's ultimate goal remains to drive down costs and make the ChatGPT service widely accessible, and he said he is confident it will achieve this over time. He added, however, that for now the company is excited to explore the potential of ideas that leverage significant computational resources.

Recently, the AI tech industry has been focusing on developing low-cost, high-efficiency solutions.

Analysts view Altman’s announcement as a strategic response to user feedback suggesting that the recently released GPT-5 didn’t deliver the dramatic performance improvements some had anticipated.

Some experts interpret this move as an attempt to overcome the limitations of the scaling laws in AI development.

The scaling laws posit that performance improves predictably as model size, data volume, and computing power increase. However, critics argue that the benefits of this scaling approach have reached a plateau.

While techniques like test-time compute, which extend inference time to boost performance, are gaining traction, they still show limitations in areas where the model lacks training data.