A North Korean hacking group known as Kimsuky, operating under the Reconnaissance General Bureau, has been caught using artificial intelligence (AI)-generated deepfakes to impersonate South Korean military agencies in cyberattacks. This sophisticated spear-phishing campaign aims to steal sensitive information from targeted organizations.

On Monday, the Genians Security Center (GSC) reported that a spear-phishing attack, attributed to the Kimsuky group, took place in July of this year.

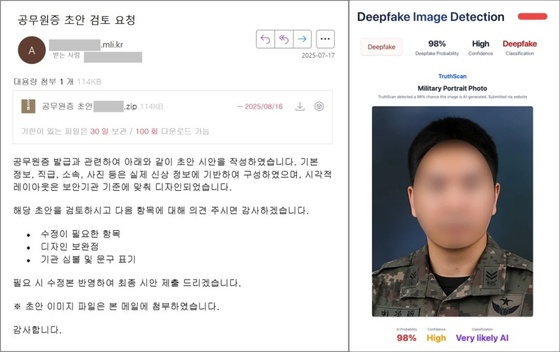

The attackers leveraged OpenAI’s ChatGPT to create convincing fake military personnel identification (ID) images. These were then used in phishing emails disguised as requests for image review.

While the report didn’t disclose specific targets, it noted that the sender’s address was carefully crafted to mimic an official military agency domain.

Analysis of the attached ID image with the Truthscan deepfake-detection service estimated a 98% probability that it was a deepfake.

Because forging military IDs is illegal, ChatGPT typically refuses requests to create such documents. However, the report explains that attackers can bypass these AI safeguards through prompt engineering and persona manipulation.

This involves tricking the model into believing it is producing mock-up designs or sample concepts for a legitimate purpose.

The attackers' compressed file, named Public_Service_ID_Draft(***).zip, contained a malicious shortcut file (with a .lnk extension). When the accompanying batch file, LhUdPC3G.bat, is executed, it triggers a chain of malicious activity.

The batch file extracts and runs code obfuscated with environment variables, connecting the compromised device to the attackers' command-and-control (C2) server. Scripts delivered from the C2 server can then identify additional targets, exfiltrate data, or install remote access tools.
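For defenders triaging suspicious attachments like this one, a simple first step is to list what an archive contains before anything in it is opened. The Python sketch below is illustrative only: the function name `flag_risky_members` and the `RISKY_EXTENSIONS` list are assumptions, not indicators taken from the GSC report, and an extension check is a coarse filter rather than a reliable detection method.

```python
import sys
import zipfile

# Assumed, non-exhaustive list of extensions often abused to launch code from
# phishing archives; it is NOT taken from the GSC report.
RISKY_EXTENSIONS = (".lnk", ".bat", ".cmd", ".vbs", ".js", ".hta", ".ps1")


def flag_risky_members(zip_path):
    """Return archive member names whose extension is on the risky list.

    Only the ZIP directory is read; nothing is extracted or executed.
    """
    with zipfile.ZipFile(zip_path) as archive:
        return [
            name
            for name in archive.namelist()
            # Compare case-insensitively so "ID.LNK" is caught as well.
            if name.lower().endswith(RISKY_EXTENSIONS)
        ]


if __name__ == "__main__":
    # Usage (hypothetical script name): python check_zip.py a.zip [b.zip ...]
    for path in sys.argv[1:]:
        for member in flag_risky_members(path):
            print(f"{path}: {member}")
```

An archive like the one described in the report, containing a .lnk shortcut and a .bat file, would have both members flagged. Because attackers can rename files or nest archives, a check like this only complements mail filtering and endpoint protection.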

The same C2 infrastructure was previously used in June for the ClickFix Popup phishing campaign, which primarily targeted individuals with North Korean connections.

The report emphasizes that the techniques used to bypass the AI safeguards were not particularly complex, and warns that more sophisticated attacks could be launched using work-related topics or other enticing lures.