Microsoft (MS) has unveiled its first robotics model, Rho-alpha (ρα), marking its entry into the Physical AI market.

On Thursday, MS Research introduced Rho-alpha, which it describes as a "VLA+" model because it goes beyond existing VLA models by adding tactile sensing to the inputs it perceives and reasons over.

Microsoft said it built Rho-alpha, a Vision-Language-Action (VLA) model, on its Phi series of vision-language models. It qualifies as a VLA+ model because it expands the set of perceptual and learning modalities beyond those typically used by existing VLAs, most notably by incorporating tactile sensing.

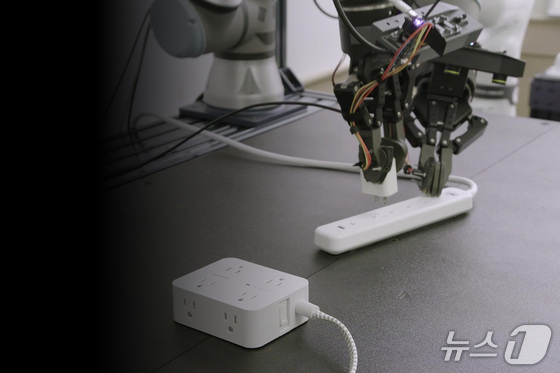

According to the company, the model translates natural language commands into control signals for robots performing bimanual (two-handed) manipulation tasks. Microsoft says this could allow robots to operate more autonomously in unstructured environments.

Unlike existing VLA models such as Google DeepMind's RT-2, which rely mainly on visual and language inputs, Rho-alpha uses tactile feedback to detect when it is in contact with an object and to perform delicate manipulation.

Rho-alpha continues to improve through human-guided learning. When a robot makes an error, an operator can correct its actions using a device such as a 3D mouse. The system then learns from this feedback in real time and applies what it has learned to future tasks.

To address the scarcity of robotics data, Microsoft generates synthetic data with reinforcement learning techniques in the NVIDIA Isaac Sim simulator. It runs these simulations on Azure cloud infrastructure and improves training efficiency by combining the synthetic data with commercial and real-world demonstration datasets.

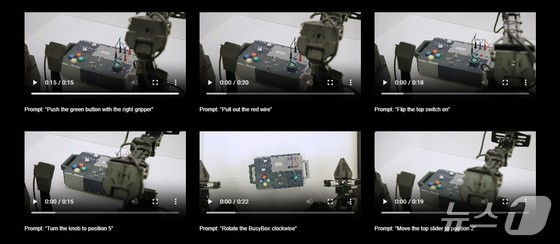

MS Research has also introduced BusyBox, a physical interaction benchmark designed to validate Rho-alpha’s performance.

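BusyBox is a 3D-printable kit made up of six modules featuring switches, sliders, buttons, and dials. It is designed to evaluate how well a robot generalizes its understanding of basic affordances, that is, the actions an object permits, such as pressing a button or turning a dial.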

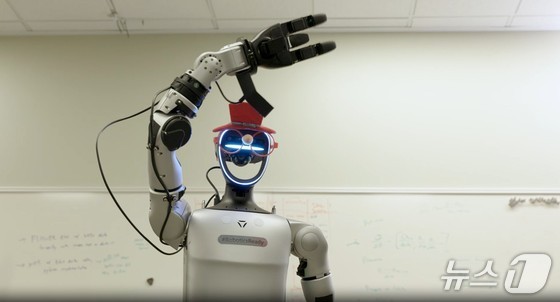

Microsoft and University of Washington researchers are currently training dual-arm robots and humanoid robots (such as Unitree's G1) running Rho-alpha to learn behavioral trajectories across a range of manipulation environments.

A Microsoft spokesperson said the company plans to release details about the dual-arm system and other technologies in the coming months. The company is also launching a research early-access program for robot manufacturers and system integrators.

Ashley Llorens, Corporate Vice President and Managing Director of the MS Research Accelerator, said that Physical AI is transforming robotics by enabling robots to perceive, reason, and act in complex and unpredictable environments.