OpenAI has once again delayed the release of its open-source model, which was scheduled to launch next week.

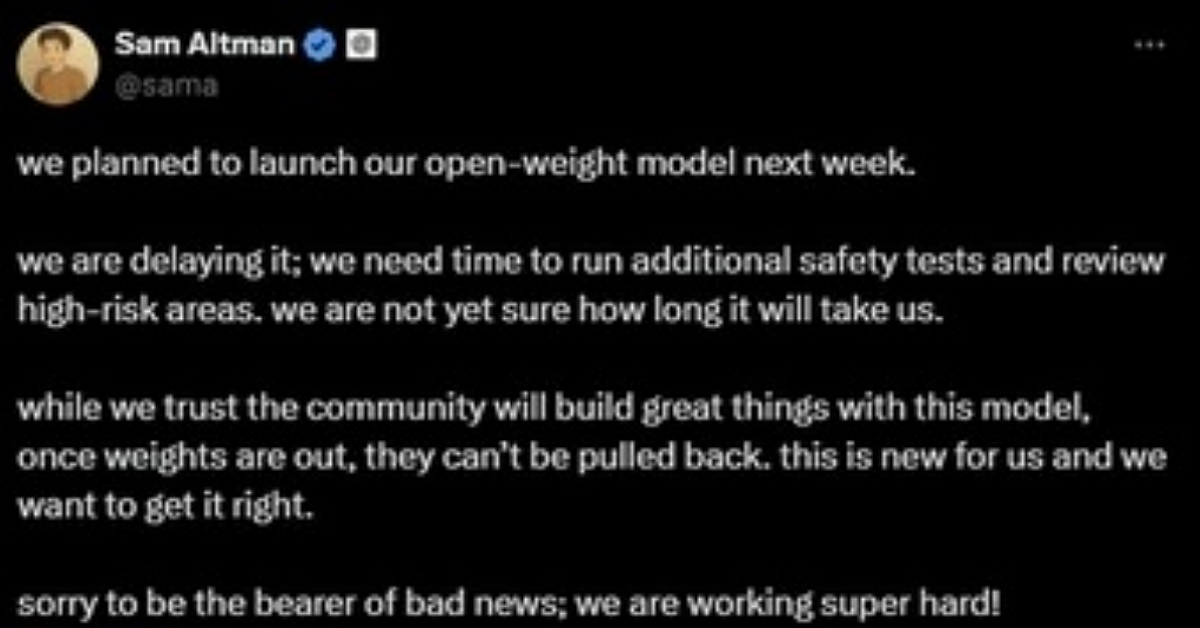

Sam Altman, chief executive officer (CEO) of OpenAI, announced on X on July 12 that the company is conducting additional safety tests on the model. He said the team needs more time to review high-risk areas and is not yet sure how long that will take.

Altman explained that the company is exercising caution because once a model’s weights are released, they cannot be retracted. He apologized for the disappointing news and assured the public that the team is working hard on the project.

This marks the second postponement of the open model’s release, following a similar delay last month.

Aidan Clark, OpenAI’s Vice President of Research, elaborated on the situation. He noted that while the open-source model performs very well, the company has set a high bar for its release. Clark said the team needs additional time to ensure it releases a model it can be proud of in every respect.

The launch of OpenAI’s open model has been one of the most anticipated artificial intelligence (AI) developments this summer, alongside the release of the next-generation integrated model, GPT-5.

The timeline for GPT-5’s release has also been pushed back. Initial reports suggested a May launch, but OpenAI later announced on its official YouTube channel that the model would be released this summer, contingent on meeting internal safety benchmarks.

Industry experts speculate that the delay in rolling out OpenAI’s new model stems from multiple factors. These include the model’s failure to demonstrate performance improvements comparable to the leap from GPT-3 to GPT-4, as well as safety concerns amid intensifying competition in the AI field.

Analysts also point to a combination of other challenges, including the limits of training on high-quality data, an approach that previously drove performance gains, and financial constraints related to data center and computing infrastructure costs.